llm-spec: Making LLM Parameter Compatibility Verifiable, Trackable, and Aggregatable

When you integrate multiple LLM providers, or build OpenAI/Anthropic/Gemini/xAI compatibility layers behind your own gateway or proxy, the real challenge isn't whether requests succeed. It's knowing whether parameters are truly supported and whether behavior is consistent. When something breaks, it's having a clear evidence chain that shows exactly which request, which parameter change, and what consequence followed.

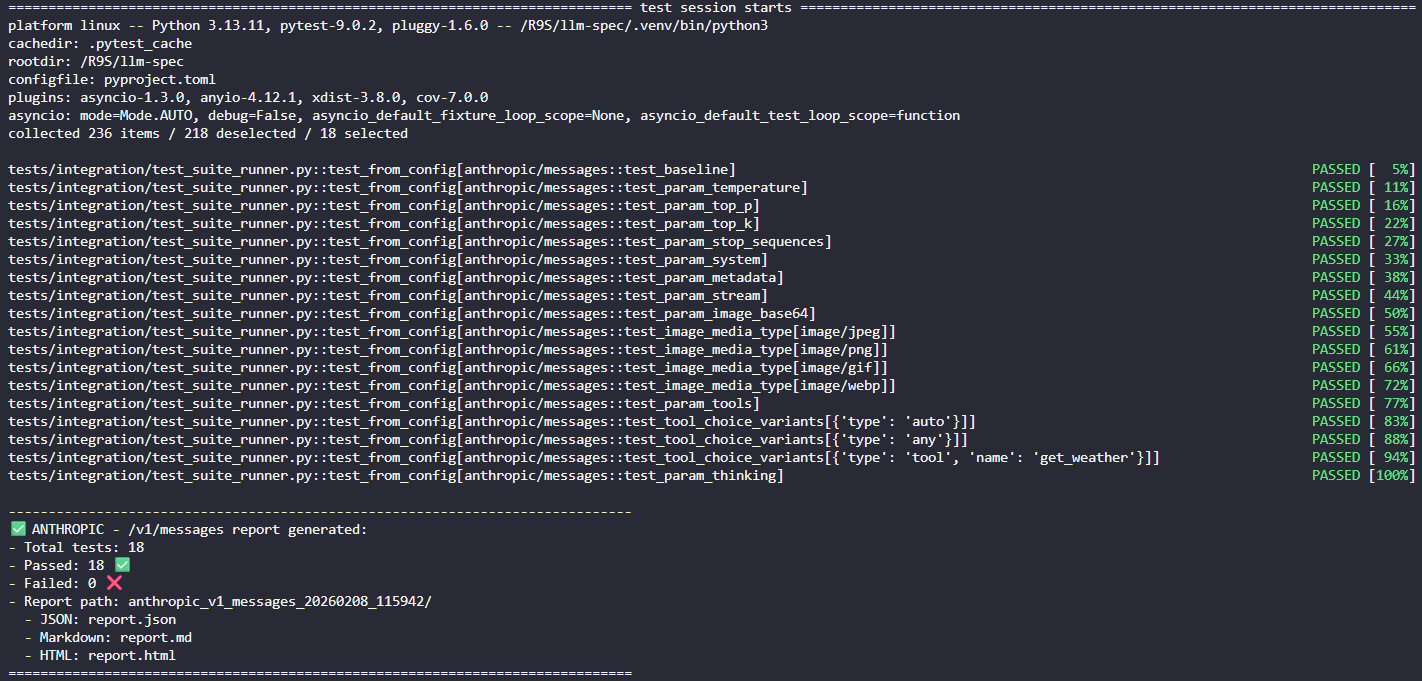

Today we’re introducing llm-spec, an open-source tool that transforms parameter compatibility from guesswork into configuration-driven automation with structured reporting. Evidence, not assumptions.

Get started with llm-spec on GitHub →

The Problem You Know Too Well

LLM integrations are complex. Temperature controls. Top_p settings. Response formats. Streaming configurations. Provider-specific extensions. When you’re building products on top of these capabilities, compatibility becomes mission-critical.

Consider a familiar scenario: you've integrated Anthropic's API, expanded to support OpenAI, deployed your integration, and early tests passed. Then users report inconsistent behavior. Some temperature settings work while others don't. Structured outputs occasionally fail. Streaming responses sometimes terminate prematurely. Without systematic verification, you're debugging in the dark, unable to distinguish upstream provider issues from your own implementation bugs or from fundamental protocol incompatibilities.

The problem intensifies with third-party LLM API vendors: aggregators, resellers, hosting platforms, and agent platforms that provide their own implementations. These intermediaries may introduce subtle deviations from official specifications. When something goes wrong, the question becomes: is this a vendor implementation issue, or did your own request construction introduce the problem? The answer matters, and obtaining it systematically shouldn't require custom tooling for every integration.

Why Traditional Testing Fails

Parameter compatibility testing is uniquely susceptible to misleading results:

- Intermittent failures from traffic fluctuations, rate limiting, gateway policies, or temporary model changes create false positives—teams waste days investigating transient conditions rather than genuine incompatibilities

- Intermittent successes mask deeper problems, leading teams to ship integrations that work in testing but fail in production

- Inconsistent error handling (some providers return 4xx, others 5xx, others HTTP 200 with malformed structures) means tests that only check status codes miss critical failures

- Streaming responses require more than confirming chunks arrive—essential events must not be missing, termination events must be correct, and distinguishing between upstream bugs, proxy truncation, and timing issues manually is time-consuming and error-prone

These challenges call for experimental methodology, not production monitoring. Reproducible evidence, not anecdotal observations. That’s what llm-spec delivers.

Introducing llm-spec: Protocol Compliance as Code

llm-spec transforms parameter compatibility testing from ad-hoc processes into a systematic, automated discipline. The core philosophy: control variables for precise attribution, structured validation for comprehensive coverage, aggregatable reporting for long-term tracking.

When you run llm-spec, you're not just getting pass/fail results. You're building a knowledge base of provider capabilities that informs integration decisions, vendor evaluations, and regression testing.

The tool operates through JSON5 configuration files that define test suites for specific provider-endpoint combinations. Each suite specifies baseline parameters, test cases with parameter variations, response schemas for validation, and streaming rules for real-time verification. Configuration-driven means version-controllable, reviewable, reusable. No custom test code to maintain—just data files describing what you’re testing and what results you expect.

How llm-spec Delivers More Accurate Detection

1. Controlled Variables: Change One Thing at a Time

Every llm-spec test case follows the scientific method: establish a baseline confirming basic connectivity, then introduce individual parameter variations to isolate effects. When tests fail, you immediately attribute failure to the specific change rather than hunting through complex modifications. The configuration format makes this explicit and auditable.
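Below is a minimal Python sketch of this controlled-variable rule. It assumes that each test's `params` are shallow-merged over `base_params`; the post does not describe llm-spec's actual merge logic. The helper names are hypothetical. The check rejects any test case whose request differs from the baseline in anything other than its declared `test_param`.

```python
import copy

# Sentinel so that an absent key differs from a key explicitly set to None.
_MISSING = object()

# Baseline taken from the example configuration in this post.
BASE_PARAMS = {
    "model": "claude-sonnet-4-20250514",
    "max_tokens": 128,
    "messages": [{"role": "user", "content": "Say hello briefly"}],
}


def build_request(base_params: dict, overrides: dict) -> dict:
    """Hypothetical helper: the baseline plus one test case's top-level overrides."""
    body = copy.deepcopy(base_params)  # never mutate the shared baseline
    body.update(overrides)
    return body


def changed_keys(baseline: dict, candidate: dict) -> set:
    """Top-level keys whose presence or value differs from the baseline."""
    return {
        key
        for key in baseline.keys() | candidate.keys()
        if baseline.get(key, _MISSING) != candidate.get(key, _MISSING)
    }


def check_single_variable(base_params: dict, test: dict) -> dict:
    """Build a test's request and confirm it changes only its declared test_param."""
    body = build_request(base_params, test.get("params", {}))
    expected = {test["test_param"]["name"]} if "test_param" in test else set()
    actual = changed_keys(base_params, body)
    if actual != expected:
        raise ValueError(
            f"{test['name']}: changed {sorted(actual)}, expected {sorted(expected)}"
        )
    return body


temperature_test = {
    "name": "test_param_temperature",
    "params": {"temperature": 0.7},
    "test_param": {"name": "temperature", "value": 0.7},
}
body = check_single_variable(BASE_PARAMS, temperature_test)
print(body["temperature"], body["max_tokens"])  # 0.7 128
```

This sketch compares only top-level keys. Nested parameters such as `messages[0].role` would need a path-aware comparison.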

2. Configuration-Driven Test Suites

Instead of writing Python test code, llm-spec uses JSON5 configurations that declare test scenarios:

```json5
{
  provider: "anthropic",
  endpoint: "/v1/messages",
  schemas: {
    response: "anthropic.MessagesResponse",
    stream_chunk: "anthropic.AnthropicStreamChunk",
  },
  base_params: {
    model: "claude-sonnet-4-20250514",
    max_tokens: 128,
    messages: [{ role: "user", content: "Say hello briefly" }],
  },
  tests: [
    { name: "test_baseline", is_baseline: true },
    {
      name: "test_param_temperature",
      params: { temperature: 0.7 },
      test_param: { name: "temperature", value: 0.7 },
    },
    {
      name: "test_streaming_basic",
      stream: true,
      params: { stream: true },
      test_param: { name: "stream", value: true },
      stream_rules: {
        min_observations: 1,
        checks: [
          {
            type: "required_sequence",
            values: [
              "message_start",
              "content_block_start",
              "content_block_delta",
              "content_block_stop",
              "message_delta",
              "message_stop",
            ],
          },
          { type: "required_terminal", value: "message_stop" },
        ],
      },
    },
  ],
}
```

This configuration tests baseline functionality, temperature parameter variation, and streaming behavior with full event sequence validation. The streaming rules declare the complete event sequence ending with message_stop, ensuring providers deliver the full response lifecycle, not truncated streams.

3. Structured Response Validation: Beyond “It Works”

For each endpoint, llm-spec validates responses against Pydantic models. Non-streaming responses are validated against schemas.response and streaming chunks against schemas.stream_chunk. When validation fails, reports include the specific field paths that are missing or malformed, enabling rapid diagnosis of protocol deviations.

In vendor evaluation scenarios, define schemas based on official provider specifications and use them as executable compliance checks. Vendor missing a required field? Wrong type? llm-spec surfaces discrepancies immediately. Objective data for vendor assessment. Concrete evidence for negotiations.
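llm-spec does this with Pydantic models. The dependency-free sketch below is not llm-spec's code. It only illustrates the idea of reporting every missing or mistyped field together with its path, similar to the `loc` tuples in Pydantic's `ValidationError.errors()`. The schema format and the trimmed `MESSAGES_RESPONSE` shape are assumptions made for illustration.

```python
from typing import Any

# Hypothetical, heavily simplified schema: a dict means "object with these
# fields", a one-element list means "array of this item schema", and a type
# means "leaf value of this type". It is not the official Anthropic schema.
MESSAGES_RESPONSE = {
    "id": str,
    "type": str,
    "role": str,
    "content": [{"type": str, "text": str}],
    "stop_reason": str,
}


def validate(value: Any, schema: Any, path: tuple = ()) -> list:
    """Return (path, message) pairs for every missing or mistyped field."""
    if isinstance(schema, dict):
        if not isinstance(value, dict):
            return [(path, f"expected object, got {type(value).__name__}")]
        errors = []
        for key, sub_schema in schema.items():
            if key not in value:
                errors.append((path + (key,), "field required"))
            else:
                errors.extend(validate(value[key], sub_schema, path + (key,)))
        return errors
    if isinstance(schema, list):
        if not isinstance(value, list):
            return [(path, f"expected array, got {type(value).__name__}")]
        errors = []
        for index, item in enumerate(value):
            errors.extend(validate(item, schema[0], path + (index,)))
        return errors
    if not isinstance(value, schema):
        return [(path, f"expected {schema.__name__}, got {type(value).__name__}")]
    return []


def format_path(path: tuple) -> str:
    return ".".join(str(part) for part in path)


# A deviating response: wrong type for content[0].text, and stop_reason missing.
response = {
    "id": "msg_01",
    "type": "message",
    "role": "assistant",
    "content": [{"type": "text", "text": 42}],
}
for path, message in validate(response, MESSAGES_RESPONSE):
    print(f"{format_path(path)}: {message}")
# content.0.text: expected str, got int
# stop_reason: field required
```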

4. Streaming Completeness Verification

Streaming APIs require specialized validation. llm-spec models streaming responses as sequences of observations with declarative checks:

- Required sequences: Essential event types appear in correct order

- Required terminal events: Streaming responses terminate properly

- Required any-of: At least one acceptable event type appears

- Required presence: Critical events aren’t missing

Automatically detect truncated streams, missing termination events, malformed chunk sequences. Transform complex streaming validation into maintainable, auditable configurations.
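Below is a minimal sketch of how these four checks could be evaluated against the list of observed event types. `required_sequence` and `required_terminal` come from the config example above. The names `required_any_of` and `required_presence`, and the reading of `min_observations` as a minimum event count, are assumptions.

```python
# Hypothetical evaluator for declarative stream checks. "required_sequence"
# and "required_terminal" match the config example; "required_any_of" and
# "required_presence" are assumed names for the other two checks.


def contains_in_order(events: list, sequence: list) -> bool:
    """True if every item of `sequence` occurs in `events` in that order (gaps allowed)."""
    remaining = iter(events)
    # `x in iterator` consumes the iterator up to the match, which enforces order.
    return all(expected in remaining for expected in sequence)


def evaluate_stream(events: list, rules: dict) -> list:
    """Return failure labels; an empty list means the stream passed."""
    failures = []
    # Assumption: min_observations is the minimum number of events observed.
    if len(events) < rules.get("min_observations", 1):
        failures.append("too_few_observations")
    for check in rules.get("checks", []):
        kind = check["type"]
        if kind == "required_sequence":
            if not contains_in_order(events, check["values"]):
                failures.append("sequence:" + ">".join(check["values"]))
        elif kind == "required_terminal":
            if not events or events[-1] != check["value"]:
                failures.append("terminal:" + check["value"])
        elif kind == "required_any_of":
            if not any(value in events for value in check["values"]):
                failures.append("any_of:" + "|".join(check["values"]))
        elif kind == "required_presence":
            failures.extend("missing:" + v for v in check["values"] if v not in events)
        else:
            raise ValueError(f"unknown check type: {kind}")
    return failures


ANTHROPIC_SEQUENCE = [
    "message_start", "content_block_start", "content_block_delta",
    "content_block_stop", "message_delta", "message_stop",
]
RULES = {
    "min_observations": 1,
    "checks": [
        {"type": "required_sequence", "values": ANTHROPIC_SEQUENCE},
        {"type": "required_terminal", "value": "message_stop"},
    ],
}

# A stream cut off before message_stop, e.g. by a proxy timeout.
truncated = ANTHROPIC_SEQUENCE[:3] + ["content_block_delta"] + ANTHROPIC_SEQUENCE[3:5]
print(evaluate_stream(truncated, RULES))  # two failures: the sequence check and terminal:message_stop
```

The sketch treats the required sequence as an ordered subsequence rather than an exact match. That lets repeated events such as multiple `content_block_delta` chunks pass, which is how real streams look. Whether llm-spec uses the same semantics is not stated in this post.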

5. Aggregatable Reporting: From Point-in-Time to Knowledge Base

llm-spec outputs aren’t ephemeral logs—they’re structured artifacts designed for aggregation and long-term tracking. JSON, Markdown, and HTML formats provide both machine-readable data and human-readable summaries. Aggregate reports compile parameter support matrices across multiple endpoints.
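Below is a minimal sketch of how per-endpoint results could be pivoted into a support matrix. The input shape (an `endpoint` label plus a `{param: passed}` map) and the vendor names are hypothetical placeholders, not llm-spec's actual JSON report schema. The output mirrors the Markdown table style the reports use.

```python
from collections import defaultdict

# Hypothetical per-endpoint results: {param: passed?}. llm-spec's real
# report schema may differ; the vendor names are placeholders.
REPORTS = [
    {"endpoint": "vendor-a /v1/messages",
     "results": {"temperature": True, "top_k": True, "stop_sequences": False}},
    {"endpoint": "vendor-b /v1/messages",
     "results": {"temperature": True, "stop_sequences": True}},
]


def support_matrix(reports: list) -> dict:
    """Pivot per-endpoint results into {param: {endpoint: passed}}."""
    matrix = defaultdict(dict)
    for report in reports:
        for param, passed in report["results"].items():
            matrix[param][report["endpoint"]] = passed
    return dict(matrix)


def to_markdown(matrix: dict, endpoints: list) -> str:
    """Render the matrix; '-' marks a parameter that an endpoint never tested."""
    symbol = {True: "✅", False: "❌", None: "-"}
    rows = [
        "| param | " + " | ".join(endpoints) + " |",
        "|---|" + "---|" * len(endpoints),
    ]
    for param in sorted(matrix):
        cells = [symbol[matrix[param].get(ep)] for ep in endpoints]
        rows.append(f"| {param} | " + " | ".join(cells) + " |")
    return "\n".join(rows)


endpoints = [r["endpoint"] for r in REPORTS]
print(to_markdown(support_matrix(REPORTS), endpoints))
```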

These reports are capability baselines. Re-run them after gateway changes, model upgrades, or SDK updates, and a diff against the previous run immediately highlights what changed, catching regressions before users encounter them. Accumulated reports create institutional memory of provider behavior.
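Below is a sketch of the run-to-run diff. It assumes each run has already been reduced to a hypothetical `{parameter: passed}` map, for example extracted from two saved JSON reports. The function name and the snapshots are illustrative only.

```python
import json


def diff_runs(previous: dict, current: dict) -> dict:
    """Compare two {parameter: passed} maps from successive runs."""
    shared = previous.keys() & current.keys()
    return {
        "regressed": sorted(k for k in shared if previous[k] and not current[k]),
        "fixed": sorted(k for k in shared if not previous[k] and current[k]),
        "added": sorted(current.keys() - previous.keys()),
        "removed": sorted(previous.keys() - current.keys()),
    }


# Hypothetical snapshots taken before and after a gateway change.
before = {"temperature": True, "top_p": True, "stream": True, "metadata": False}
after = {"temperature": True, "top_p": False, "stream": True, "metadata": True,
         "thinking.type": True}
print(json.dumps(diff_runs(before, after)))
# {"regressed": ["top_p"], "fixed": ["metadata"], "added": ["thinking.type"], "removed": []}
```

A non-empty `regressed` list is a natural condition for failing a CI job.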

Real-World Use Cases

Multi-Provider Alignment: Run identical tests against each provider, aggregate results into compatibility matrices. Common code paths for consistent parameters, isolated handling for provider-specific variations.

Gateway Pass-Through Verification: Confirm your gateway passes parameters through unchanged. Tests validate specific parameters arrive upstream unmodified. Reports as evidence of transparency.
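One way to check pass-through is to point the gateway at a mock upstream that records the request body it receives, then diff that body against what the client sent. The recursive diff below is a sketch under that assumption. The mock upstream, the captured bodies, and the function names are hypothetical, not part of llm-spec as described here.

```python
class _Missing:
    """Sentinel for a key or list item that is present on only one side."""

    def __repr__(self) -> str:
        return "<missing>"


MISSING = _Missing()


def json_diff(sent, received, path="$"):
    """Yield (path, sent_value, received_value) for every difference."""
    if isinstance(sent, dict) and isinstance(received, dict):
        for key in sorted(sent.keys() | received.keys()):
            yield from json_diff(
                sent.get(key, MISSING), received.get(key, MISSING), f"{path}.{key}"
            )
    elif isinstance(sent, list) and isinstance(received, list):
        for i in range(max(len(sent), len(received))):
            a = sent[i] if i < len(sent) else MISSING
            b = received[i] if i < len(received) else MISSING
            yield from json_diff(a, b, f"{path}[{i}]")
    # Compare types too: in Python True == 1 and 1 == 1.0, but a gateway that
    # rewrites true -> 1 is not a transparent pass-through.
    elif type(sent) is not type(received) or sent != received:
        yield (path, sent, received)


# Hypothetical capture: what the client sent vs. what the mock upstream received.
sent = {"model": "claude-sonnet-4-20250514", "temperature": 0.7, "top_k": 40,
        "stop_sequences": ["END"]}
received = {"model": "claude-sonnet-4-20250514", "temperature": 1.0,
            "stop_sequences": ["END"]}
for path, before, after in json_diff(sent, received):
    print(path, before, "->", after)
# $.temperature 0.7 -> 1.0
# $.top_k 40 -> <missing>
```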

Regression Testing After Provider Updates: Providers regularly update APIs, modify behaviors, adjust error handling. After changes, run llm-spec to identify affected parameters, changed structures, broken streaming sequences. Automated verification, not manual investigation.

Third-Party Vendor Auditing: Evaluate new vendors with tests based on official specifications. Deviations flagged explicitly. Objective data for assessment. Concrete evidence for escalations.

What the Reports Show

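llm-spec reports prioritize actionable insights. The parameter table below records, for each tested parameter and variation, whether the request succeeded (req_status) and whether the response passed validation (resp_status):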

| param | variations | req_status | resp_status |
|---|---|---|---|
| max_tokens | - | ✅ | ✅ |
| messages[0].role | - | ✅ | ✅ |
| messages | - | ✅ | ✅ |
| model | - | ✅ | ✅ |
| messages[0].content | - | ✅ | ✅ |
| temperature | - | ✅ | ✅ |
| top_p | - | ✅ | ✅ |
| top_k | - | ✅ | ✅ |
| stop_sequences | - | ✅ | ✅ |
| system | - | ✅ | ✅ |
| metadata | - | ✅ | ✅ |
| stream | - | ✅ | ✅ |
| image.source.data | - | ✅ | ✅ |
| image.source.media_type | image/jpeg | ✅ | ✅ |
| image.source.media_type | image/png | ✅ | ✅ |
| image.source.media_type | image/gif | ✅ | ✅ |
| image.source.media_type | image/webp | ✅ | ✅ |
| tools | - | ✅ | ✅ |
| tool_choice | {'type': 'auto'} | ✅ | ✅ |
| tool_choice | {'type': 'any'} | ✅ | ✅ |
| tool_choice | {'type': 'tool', 'name': 'get_weather'} | ✅ | ✅ |
| thinking.type | - | ✅ | ✅ |

Three failure categories immediately point to debugging paths: request-level (HTTP 4xx/5xx, gateway or proxy issues), structure-level (successful requests with malformed responses), streaming-level (successful streams with missing events).

```json
{
  "provider": "anthropic",
  "endpoint": "/v1/messages",
  "test_summary": { "total_tests": 12, "passed": 9, "failed": 3 },
  "errors": [
    {
      "test_name": "test_param_response_format",
      "type": "http_error",
      "status_code": 400,
      "error": "HTTP 400: {\"error\":{\"message\":\"Unsupported value: json_schema\"}}"
    },
    {
      "test_name": "test_streaming_basic",
      "type": "validation_error",
      "status_code": 200,
      "error": "Missing required stream events: message_stop, terminal:message_stop"
    }
  ]
}
```

The conclusions decompose cleanly. response_format.type failed at the request level with HTTP 400. Streaming succeeded at the request level but failed validation because the terminal message_stop event never arrived. Debugging becomes verification.
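The three failure categories form an ordered decision: check the request first, then the response structure, then the stream. Below is a minimal sketch of that decision. The `Outcome` record is hypothetical (it is not llm-spec's report format) and holds the HTTP status, any schema errors, and any missing stream events.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Outcome:
    """Hypothetical summary of one test run."""
    status_code: Optional[int]  # None if no HTTP response arrived (timeout, DNS, ...)
    schema_errors: List[str] = field(default_factory=list)
    missing_stream_events: List[str] = field(default_factory=list)


def classify(outcome: Outcome) -> str:
    """Return the first failing layer, checked from the outside in."""
    if outcome.status_code is None or not 200 <= outcome.status_code < 300:
        return "request-level"    # gateway, proxy, or upstream rejected the call
    if outcome.schema_errors:
        return "structure-level"  # HTTP 2xx, but the body violates the schema
    if outcome.missing_stream_events:
        return "streaming-level"  # valid chunks, but an incomplete lifecycle
    return "pass"


# The two errors from the example report, re-expressed as outcomes.
print(classify(Outcome(status_code=400)))  # request-level
print(classify(Outcome(status_code=200, missing_stream_events=["message_stop"])))  # streaming-level
```

Because structure is checked only after the request succeeds, an HTTP 200 with a malformed body lands in the structure-level bucket rather than passing silently.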

The Roadmap

More providers. More endpoints. Audio, image, video multimodal streams. Instability detection through repeated sampling and cross-run comparison. Reporting platform for team-level capability dashboards. Integration with observability platforms. CI/CD pipeline plugins. Side-by-side vendor comparisons.

Protocol compliance as first-class concern in LLM integration. Visible. Trackable. Continuously verified.

Get Started Now

Building multi-provider integrations? Compatibility layers? Gateway products?

Try llm-spec. Incorporate it into your regression testing workflow.

Replace “seems to work” with verifiable evidence.

Replace manual packet capture with configuration-driven automation.

Replace guesswork with systematic compliance verification.

The tool is open source. Check the repository for documentation, example configurations, contribution guidelines. Feedback, contributions, and use case discussions welcome.

The future of LLM integration is verifiable. Make it yours.

llm-spec is developed and maintained by our AI Model Service Platform team. Building tools that help developers ship reliable AI applications faster.